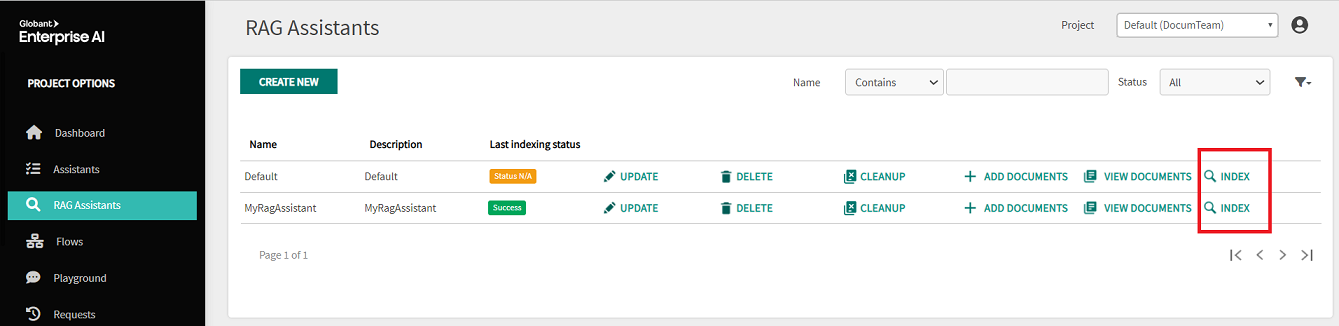

The Index section shows where the documents associated with the RAG Assistant will be stored as a collection of embedded chunks (using the embeddings model configuration).

Each document will be partitioned using a chunking strategy defined by the following parameters:

- Chunk Size: Controls the max size (in terms of number of characters) of the chunk; defaults to 1000 characters.

- Chunk Overlap: How much overlap there should be between two consecutive chunks; defaults to 100 characters.

Note that the text is split into single characters and measured by the number of characters.

Large chunk overlap may cause the same information to be extracted twice.

The maximum value for Chunk Size depends on the selected embeddings model; for example when using the default openai/text-embedding-3-small it's 8191 tokens and you need to convert it to characters, roughly around 32764 characters (considering 1 token = 4 characters).

The bigger the chunk the more general the embeddings, so it may not match specific queries as expected (low score). On the other hand, a small chunk could have a higher score but may miss important and necessary semantics.

If using the Parent Document Retrieval Strategy, the maximum for the Parent Chunk Size is 9999999999 characters while the Child Chunk Size still depends on the embeddings model.

Depending on the target LLM used for answering (those with larger context windows), you can try different sizes of these parameters and check how they work for your documents.

Remember that these strategies have different trade-offs and the best strategy likely depends on the application that you're designing.